In our quest to help speed up and automate the process of publishing high-quality open data, we spent a few months listening to the people whose role it is to publish data, and to the people creating tools for them. This is what we learned.

By Olivier Thereaux

Read the report: What data publishers need: research and recommendations

A few months ago, we published a blog post introducing some of our work on improving open data publishing. By asking the question “How can better publishing tools improve the quality, speed and cost-effectiveness of data publishing?”, we were hoping to generate novel insights compared to the traditional approach, which focuses more on people finding and using open data.

Our research

The discovery phase of our research, lasting for most of the summer and early autumn of 2017, aimed to help us understand:

- Who are the main actors in the open data publishing landscape?

- What pain points do data publishers face when publishing data?

- What are the potential solutions to these pain points?

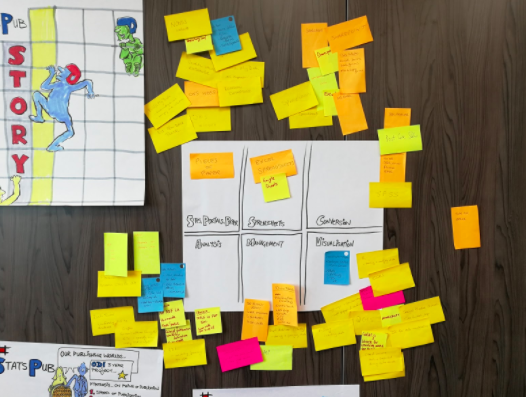

Discovery mainly involved two activities: an audit of existing data publishing tools, and user research.

The audit looked at more than 30 different tools: who makes them, who they are for, what type of data they are used for, what business model they operate on, whether they’re mature tools or not, etc.

This audit helped us understand whether there was any gaping hole in the current tools landscape (answer: not really, but the devil is in the detail), or whether there was any obvious issue with the ecosystem.

We want this research to be helpful to others too, so we have made our Publishing Tools Register public, and are now planning to work with the open data community to make it more than yet-another-tools-list: something truly useful and sustainable.

The user research focused on understanding data publishers. Through workshops and interviews, we talked directly to them and to the companies that build the tools they use. Altogether, we talked to more than 60 people, and integrated insights from separate research conducted by project partners in Northern Ireland, who are also working on creating personas for data publishers.

While our research was mostly done in the UK and may not, as a result, reflect the context and attitudes of people publishing open data worldwide, we found that most of the people we talked to had very similar needs, unmet for similar reasons, and for which solutions are also fairly consistent.

We wrote up our analysis of those needs, problems and solutions in a synthesis research report: ‘What data publishers need: synthesis of user-research’.

The report should already be an interesting read for those in our community building tools and creating processes for open data publishing – and should also be of interest to publishers recognising their own pain in the experience of others in the open data world.

Our intent, in the near future, is to maintain this as a living document: we will update it as our knowledge of the needs and issues gets refined, but we will also use it as a record of our work to help fix those issues, and our attempts at measuring our impact.

Key findings

So what exactly did we learn?

In a nutshell, we learnt three things:

- There is a rich landscape of publishing tools, but poor integration and workflows between them.

- Other than complaints about process, red tape, decision making and strategy, the top cause of concern is data quality.

- There is a lack of community support, especially for novice publishers.

How can we help?

In our publisher needs report we identified eight key solutions to the problems faced by data publishers.

The ODI is already addressing a handful of them through a number of projects and initiatives:

- Help organisations define and implement their open data vision and strategy, and use it to inform their decision making

- Improve data literacy

- Demonstrate the impact of open data

- Encourage dissemination of knowledge and experience in the domain

- Organise a community of experts and practitioners

However, we did recognise a need to increase our efforts on:

- better community support for novice publishers,

- better tools integrations and workflows, and

- better data quality tools to increase confidence in publishing.

Informing our publishing tools roadmap

We therefore resolved to make these three areas a focus for at least the remainder of the first year of our R&D programme.

We know that fostering better community support for novice publishers (1) is a significant challenge, and one that we will not meet in only a few months. Our contribution towards this goal will start, quite humbly, by iterating on our tools register. We plan to involve the community and make the register the basis for an easy-to-navigate, interactive tools guide.

Creating better tools integrations and workflows (2) is not something we can do on our own. We have therefore awarded one of our major contracts for building open source publishing tools to the OKI Frictionless Data initiative, with a major focus on improving workflows between various tools in the Frictionless Data ecosystem.

Closer to home, we are now reviewing the roadmap for Octopub, one of the tools explored and developed as part of the ODI Labs programme, with the aim of making it respond better to the needs of open data publishers, including a focus on connecting it to a number of other tools and platforms.

There are already a number of tools which publishers can use to check, or improve, the quality of their data.

The ODI maintains a number of them, notably CSVlint. Knowing that one of the key needs of publishers is increased confidence in their data through better data quality tools (3), we have decided to also dedicate some of our effort to these existing tools: part of the funding for the Frictionless Data project is earmarked to improve the GoodTables continuous data validation tool, especially its user experience.

Finally, another of our contracts for data publishing tools development has been awarded for the development of a new system for data validation and cleanup, codenamed Lintol. As a service and open source platform, Lintol will integrate a variety of libraries for validating different types of data, and offer data publishers and managers in organisations a high level of control over their quality targets.

Report: What data publishers need: research and recommendations